AI in Higher Education: Balancing Automation and Connection

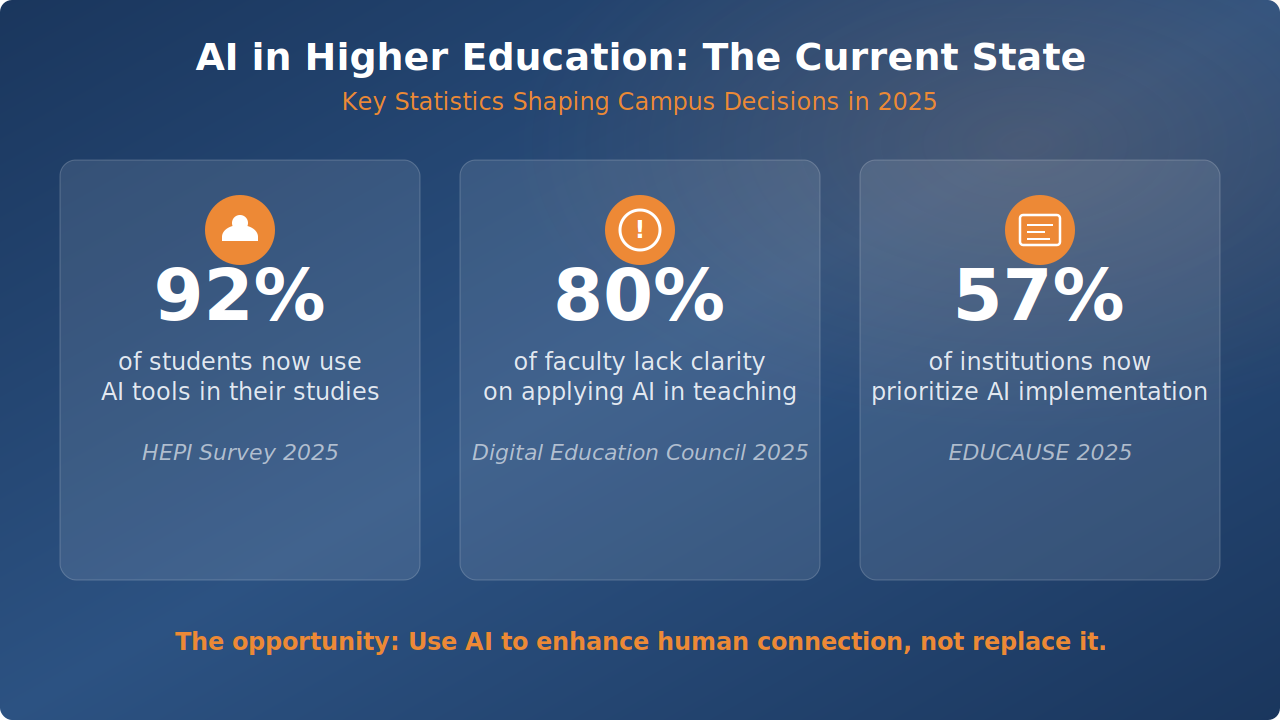

AI adoption in higher education has surged, with one survey finding that 92% of UK students now use AI tools. But institutions must balance technological efficiency with authentic human connection.

- Students report concerns about diminished emotional support from AI-only interactions.

- Successful institutions use AI to free staff for high-impact mentoring rather than to replace them.

- Predictive analytics and personalized outreach work best when paired with empathetic human follow-up.

- Only 17% of faculty globally report advanced AI proficiency, while 80% say they lack clarity on how to apply AI in teaching.

Institutions that position AI as an amplifier of human care, rather than a substitute, will see the greatest gains in engagement and retention.

The conversation around AI in higher education has shifted. Two years ago, campus leaders debated whether to allow AI tools in classrooms. Today, the question is how to implement AI without losing the human connections that define transformative education. According to the World Economic Forum, the secret to success in education is ensuring students feel safe, cared for and part of a learning community.

This tension sits at the heart of every technology decision institutions make. Students want personalized, responsive experiences. Staff members are stretched thin and budgets demand efficiency. Yet the relationships between students, faculty and advisors remain the foundation of persistence, belonging and long-term success. Getting this balance right will separate institutions that thrive from those that struggle to retain learners.

Why Is AI in Higher Education Growing So Rapidly?

A survey found that 92% of UK students now use AI in some form, an increase from 66% in 2024. Meanwhile, 57% of higher ed institutions are prioritizing AI implementation.

Ongoing staffing challenges across higher ed mean fewer people are doing more work. Administrative tasks consume hours that could be spent advising students or developing innovative programs. Students arrive on campus with sophisticated expectations shaped by personalized experiences from streaming platforms, retail and social media. They expect their institution to know them, anticipate their needs and communicate in ways that feel relevant rather than generic.

The promise of AI lies in meeting these expectations at scale.

- Chatbots can simultaneously answer thousands of routine questions.

- Predictive models can identify struggling students before they fail.

- Automated systems can send personalized reminders about deadlines, opportunities and resources.

Each of these capabilities addresses real pain points that institutions have wrestled with for decades.

What Are Students Actually Using AI For?

Student adoption extends far beyond the ChatGPT conversations that initially sparked campus debates about academic integrity. Students use AI to explain complex concepts, summarize lengthy articles and generate research ideas. The primary motivation is straightforward: 51% of students say they use AI to save time, while 50% report using it to improve the quality of their work.

Communication with institutions is another growing use case. Students expect instant responses to their questions about financial aid, registration and campus resources. When institutions effectively implement AI-powered chatbots, students gain 24/7 access to accurate information without waiting for office hours or navigating phone trees. This convenience matters especially for non-traditional students managing multiple responsibilities alongside their education.

What Are the Risks of Over-Automating the Student Experience?

The efficiency gains from automation come with trade-offs that deserve serious consideration. Research published in SAGE journals examined the impact of AI chatbots on human connection among higher ed students. The findings revealed that while students acknowledge benefits like personalized assistance and improved access to information, they express concerns about diminished emotional support when interacting primarily with automated systems.

Higher levels of chatbot interaction correlate with increased concerns about losing human connection. This finding should give pause to any institution racing to automate every touchpoint in the student journey. Beyond seeking information, students want and expect relationships, guidance and the sense that someone genuinely cares about their success. Automation that strips away these personal elements may boost efficiency metrics while undermining the belonging that drives persistence.

How Does Impersonal Communication Affect Retention?

The connection between belonging and retention is well established. When students feel seen, supported and connected to their institution, they persist through challenges that might otherwise cause them to leave. Generic mass communications work against this goal.

When every student receives identical emails about registration deadlines or campus events, they learn to ignore institutional messages entirely. This practice creates a trust deficit that becomes difficult to reverse.

Consider the difference between a mass email blast and a message that references a student's specific degree progress, flags a potential prerequisite issue and suggests courses aligned with their stated career interests. Both require approximately the same amount of staff time when powered by intelligent systems. But only one builds the relationship that keeps students engaged through their entire educational journey.

Institutions achieving strong student engagement outcomes understand that personalization must feel genuinely personal, even when delivered through automated channels.
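The contrast between a mass email and a personalized message can be sketched in a few lines of Python. This is a minimal illustration, not any particular platform's implementation: the `StudentRecord` fields, the `build_registration_message` function and the sample student and course codes are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StudentRecord:
    # Hypothetical fields; a real student information system will differ.
    first_name: str
    credits_earned: int
    credits_required: int
    missing_prerequisite: Optional[str] = None
    career_interest: Optional[str] = None
    suggested_courses: list[str] = field(default_factory=list)


def build_registration_message(student: StudentRecord) -> str:
    """Compose a registration reminder from the student's own record."""
    remaining = student.credits_required - student.credits_earned
    lines = [
        f"Hi {student.first_name},",
        f"You have earned {student.credits_earned} of "
        f"{student.credits_required} credits, with {remaining} to go.",
    ]
    # Flag a prerequisite gap only when the record shows one.
    if student.missing_prerequisite:
        lines.append(
            f"Before you register, note that {student.missing_prerequisite} "
            "is still an open prerequisite."
        )
    # Suggest courses only when a career interest is on file.
    if student.career_interest and student.suggested_courses:
        courses = ", ".join(student.suggested_courses)
        lines.append(
            f"Courses that fit your interest in {student.career_interest}: {courses}."
        )
    lines.append("Reply to this message to reach your advisor.")
    return "\n".join(lines)


# Illustrative data only.
student = StudentRecord(
    first_name="Maya",
    credits_earned=45,
    credits_required=120,
    missing_prerequisite="STAT 201",
    career_interest="data analysis",
    suggested_courses=["CS 210", "ECON 305"],
)
print(build_registration_message(student))
```

The same function serves every student, so staff time stays roughly constant, yet each message carries only the details that apply to its recipient.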

What Does Human-Centered AI Look Like in Practice?

Human-centered AI in higher education flips the traditional automation narrative. Instead of asking, "What can we automate?" it asks, "How can technology help people do their most important work better?" This framework positions AI as an amplifier of human connection rather than a replacement for it. The goal is more meaningful interactions at pivotal moments.

The EDUCAUSE Review highlights institutions creating synergistic models where AI-driven tools work alongside student success centers and academic support teams. Cross-functional teams use AI to monitor potential obstacles and deploy personalized interventions while maintaining the human relationships that students need to thrive. This collaborative approach treats technology as infrastructure that enables better human judgment rather than replaces it.

How Can AI Free Staff for Meaningful Interactions?

The strongest use case for AI in student services is liberating staff from repetitive tasks so they can focus on work that requires empathy, judgment and genuine human connection. When chatbots handle routine questions about office hours, application deadlines and transcript requests, advisors gain time for the conversations about career goals, personal challenges and academic planning that shape a student's trajectory.

AI-powered systems can reduce time spent on administrative tasks, allowing educators to redirect those hours toward mentoring and course design. We can draw a parallel to the "spreadsheet moment" in business, when automation freed professionals from routine calculations and let them focus on higher-level strategy.

AI offers the same opportunity for education. It can lift administrative burdens so that everyone, from admissions counselors to academic advisors, can spend more time on interactions that actually change lives.

How Do Predictive Analytics Support Proactive Care?

Early warning systems are one of the most promising AI applications for student success. To more efficiently identify at-risk students, modern predictive models analyze:

- Attendance patterns

- Assignment submissions

- Engagement metrics

- Demographic factors

This capability enables proactive retention strategies that reach students when intervention can still make a difference.

The power lies in timely, personalized response. When an algorithm flags a first-year student showing concerning patterns, the system doesn't send a generic "check in with your advisor" message. Instead, it routes specific insights to the appropriate staff member, who can then reach out with context about the student's situation.

- The AI identifies the need.

- The human provides the care.

This combination of technological intelligence and human empathy creates support systems that feel both responsive and genuine.
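The flag-then-route pattern described above can be sketched as follows. This is a deliberately simple rule-based stand-in rather than a trained predictive model, and it uses only three behavioral signals. The thresholds, field names and the `review_student` function are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StudentSignals:
    student_id: str
    attendance_rate: float   # fraction of sessions attended, 0.0 to 1.0
    submission_rate: float   # fraction of assignments submitted, 0.0 to 1.0
    weekly_logins: int       # learning-platform logins in the past week


@dataclass
class AdvisorAlert:
    student_id: str
    reasons: list[str]       # context the advisor sees before reaching out


# Hypothetical thresholds chosen for illustration only.
ATTENDANCE_FLOOR = 0.75
SUBMISSION_FLOOR = 0.80
LOGIN_FLOOR = 2


def review_student(signals: StudentSignals) -> Optional[AdvisorAlert]:
    """Return an alert for a human advisor, or None if no follow-up is needed."""
    reasons = []
    if signals.attendance_rate < ATTENDANCE_FLOOR:
        reasons.append(f"attendance at {signals.attendance_rate:.0%}")
    if signals.submission_rate < SUBMISSION_FLOOR:
        reasons.append(f"{signals.submission_rate:.0%} of assignments submitted")
    if signals.weekly_logins < LOGIN_FLOOR:
        reasons.append(f"{signals.weekly_logins} platform logins this week")
    # Require at least two signals so one bad week does not trigger an alert.
    if len(reasons) >= 2:
        return AdvisorAlert(signals.student_id, reasons)
    return None


alert = review_student(StudentSignals("s-001", 0.60, 0.50, 5))
if alert is not None:
    print(f"Route to advisor: {alert.student_id} ({'; '.join(alert.reasons)})")
```

Note that the function never messages the student. It only hands an advisor the specific reasons behind the flag, which keeps the outreach itself in human hands.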

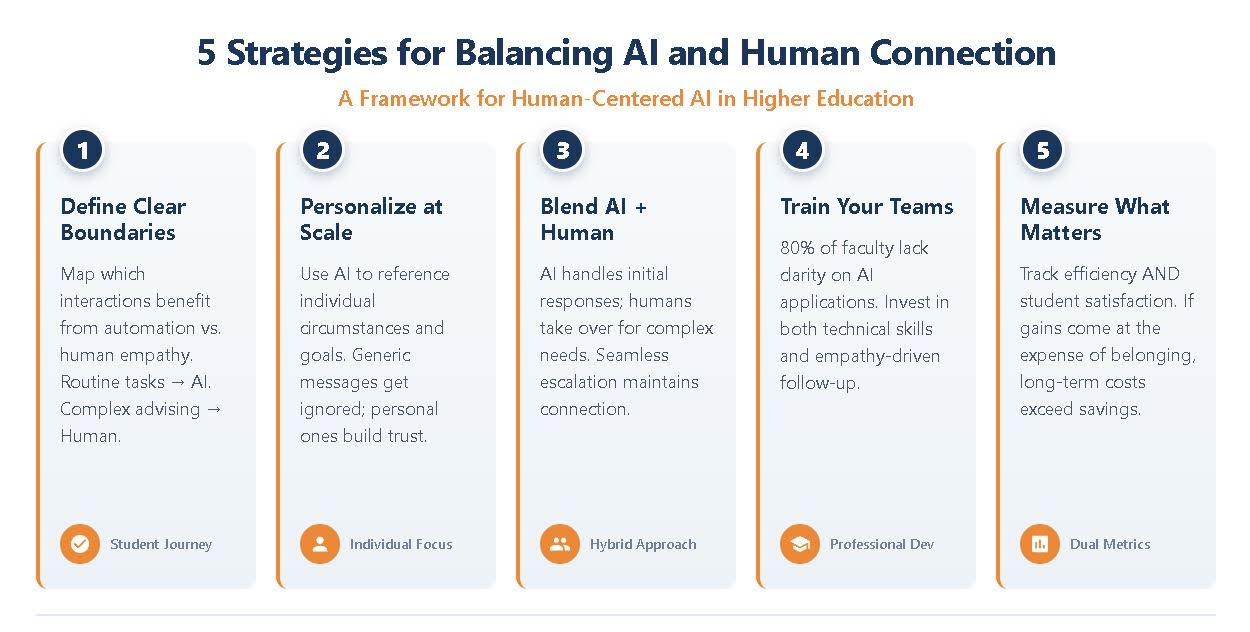

5 Strategies for Balancing Automation and Human Connection

Implementing human-centered AI in higher education requires intentional design choices at every stage. The following strategies help institutions maximize efficiency gains while preserving the relationships that drive student success.

- Define clear boundaries between AI and human touchpoints. Map the student journey and identify which interactions benefit from automation versus which require human judgment and empathy. Routine information requests, deadline reminders and scheduling tasks often work well with AI. Complex advising conversations, crisis intervention and relationship-building moments demand human attention.

- Use AI-powered personalization to enhance communication. Generic messages get ignored. Personalized outreach that references individual circumstances, academic progress and stated goals builds trust and engagement. AI enables this personalization at scale, but the content should still feel like it comes from people who know and care about each student.

- Implement blended messaging approaches. The most effective communication strategies combine AI efficiency with human empathy. AI handles initial responses and routine follow-ups while seamlessly escalating complex conversations to appropriate staff members. Students receive immediate acknowledgment of their needs alongside the human connection they require for deeper support.

- Train staff on AI tools and empathy-driven follow-up. Comprehensive training should cover both technical capabilities and best practices for maintaining human connection within AI-augmented workflows. According to the Digital Education Council's Global AI Faculty Survey, 40% of faculty are beginners in AI literacy, only 17% report advanced proficiency, and 80% feel they lack clarity on how AI can be applied.

- Measure both efficiency metrics and student satisfaction. Tracking response times and cost savings tells only part of the story. Institutions must also monitor student satisfaction, sense of belonging and perception of institutional care. If efficiency gains come at the expense of student experience, the long-term costs will far exceed short-term savings.
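The boundary between automated and human touchpoints, and the escalation step in a blended messaging approach, can be sketched as a small router. Keyword matching here is a simplistic stand-in for whatever intent detection a real system would use. The topics, escalation terms, reply text and the `route_message` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical routine topics with canned answers.
ROUTINE_ANSWERS = {
    "office hours": "Office hours are listed on the advising page.",
    "deadline": "Key dates are listed on the academic calendar.",
    "transcript": "You can request a transcript through the student portal.",
}

# Hypothetical terms that suggest the student needs a person, not a bot.
ESCALATION_TERMS = ("withdraw", "overwhelmed", "emergency", "appeal")


@dataclass
class Reply:
    handled_by: str   # "bot" or "staff"
    text: str


def route_message(message: str) -> Reply:
    """Answer routine questions automatically and escalate everything else."""
    text = message.lower()
    # Check sensitive terms first so they never receive a canned answer.
    if any(term in text for term in ESCALATION_TERMS):
        return Reply("staff", "Thank you for reaching out. A staff member will follow up with you personally.")
    for topic, answer in ROUTINE_ANSWERS.items():
        if topic in text:
            return Reply("bot", answer)
    # Anything unrecognized defaults to a human rather than a guess.
    return Reply("staff", "We have passed your question to a staff member.")


print(route_message("What are your office hours?"))
print(route_message("I missed the deadline and feel overwhelmed."))
```

Two design choices matter in this sketch: escalation is checked before routine topics, and unrecognized messages go to staff by default, so the student still gets an immediate acknowledgment while the substantive response comes from a person.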

How Should Institutions Approach AI Governance and Ethics?

Using AI in higher education responsibly requires thoughtful governance that addresses privacy, bias, transparency and accountability. Students entrust institutions with sensitive personal information. Using that data to power predictive models and personalized communications creates obligations around consent, security and ethical use.

Algorithmic bias presents particular challenges. If AI systems are trained on historical data that reflects existing inequities, they may perpetuate or amplify those patterns. An early warning system that disproportionately flags students from certain demographic groups could lead to differential treatment that undermines rather than supports equity goals. Regular auditing and diverse perspectives in system design help mitigate these risks.
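One basic form of regular auditing is to compare how often an early warning system flags students in each group. The sketch below assumes audit records are available as simple pairs of group label and flag outcome. The function names and the sample data are illustrative, and a gap between groups is a prompt for human review rather than proof of bias on its own.

```python
from collections import defaultdict


def flag_rates_by_group(records):
    """Compute the share of flagged students per group.

    records: iterable of (group_label, was_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def largest_rate_gap(rates):
    """Difference between the highest and lowest group flag rates."""
    return max(rates.values()) - min(rates.values())


# Illustrative audit data, not real results.
audit_records = [
    ("group_a", True), ("group_a", False), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]
rates = flag_rates_by_group(audit_records)
print(rates)
print(largest_rate_gap(rates))
```

Running a check like this on a schedule, and having a diverse review group interpret the results, gives the auditing recommendation a concrete starting point.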

What Policies Support Responsible AI Adoption?

Clear institutional policies establish expectations for how AI tools will and won't be used in student services. These guidelines should address data governance, informed consent, transparency about when students are interacting with automated systems and protocols for human oversight of AI-generated recommendations. Students are more likely to trust AI-enhanced services when they understand how their data is being used and have confidence that humans remain accountable for decisions that affect them.

Professional development ensures that policies translate into practice. Staff members need ongoing training, not only in using AI tools, but in recognizing their limitations and knowing when human judgment must override algorithmic suggestions. Building a culture of comprehensive student engagement means ensuring that technology serves institutional values rather than driving decisions that conflict with them.

Frequently Asked Questions

What is human-centered AI in higher education? Human-centered AI refers to technology implementations that prioritize enhancing human capabilities rather than replacing human interactions. In higher ed, this means using AI to handle routine administrative tasks, identify students who need support and personalize communications at scale, while preserving meaningful human relationships between students, faculty and staff. The goal is freeing people to do work that requires empathy, judgment and genuine connection.

How can AI improve student retention without replacing human advisors? AI improves retention by identifying at-risk students earlier and enabling more timely, personalized interventions. Predictive analytics can flag concerning patterns weeks before traditional indicators would surface, giving advisors time to reach out proactively. AI also handles routine communications and information requests, creating more time for advisors to have substantive conversations about goals, challenges and academic planning. The technology enhances advisor effectiveness rather than substituting for their expertise and care.

What are the biggest risks of AI in student services? The primary risks include:

- Over-automation that diminishes human connection

- Algorithmic bias that perpetuates existing inequities

- Privacy concerns around student data

- Erosion of trust when students feel they're interacting with impersonal systems

Institutions can mitigate these risks through clear governance policies, regular auditing of AI systems, transparency with students about how technology is being used and maintaining human oversight of AI-generated recommendations and interventions.

Transform Your Student Experience With Human-Centered Technology

AI adoption is about using intelligent systems to create space for more meaningful human connections at scale. When technology handles routine tasks, staff members can focus on the mentoring, advising and relationship-building that transforms student outcomes. When predictive analytics identify students who need support, caring professionals can intervene before challenges become insurmountable.

Modern Campus empowers institutions to achieve this balance through solutions designed to enhance student engagement while preserving the personal connections that matter most. From AI-enhanced messaging platforms to personalized web experiences and comprehensive engagement tracking, the right technology amplifies your team's ability to support every learner throughout their journey.

Request a demo to discover how human-centered technology can transform your institution's approach to student success.

Last updated: February 4, 2026